Photorealistic Synthetic Crowds Simulation in Indoor environments (PSCS-I): a novel synthetic dataset for realistic simulation of crowd panic and violence behaviors

Overview

Accurate crowd behavior analysis is an important yet challenging task for modern surveillance systems, especially in indoor environments, where dense crowds, complex layouts, and frequent occlusions make abnormal crowd behaviors such as panic and violence harder to detect reliably. Progress in this field has been limited by the lack of large-scale, diverse, and high-quality annotated datasets that capture the complexity of the real world. To address this gap, this work introduces PSCS-I, a photorealistic synthetic dataset generated with the state-of-the-art rendering capabilities of Unreal Engine 5 (UE5). In detail, PSCS-I is the first synthetic dataset to:

- Capture multiple scenes from 26 unique indoor environments, with more than 53.8 hours of video annotated at the frame level.

- Contain multiple normal (walking and social) and abnormal (mixed running, evacuation, and fighting) crowd behaviors, each associated with a trigger event (e.g., an earthquake).

- Introduce videos captured from Body Worn Cameras (BWC).

- Outperform real-world datasets by mitigating the sim2real gap through the rendering capabilities of UE5 and the utilization of the Enhancing Photorealism Enhancement (EPE) method.

- Significantly challenge the current state-of-the-art crowd behavior analysis methods in multiclass classification.

Methodology

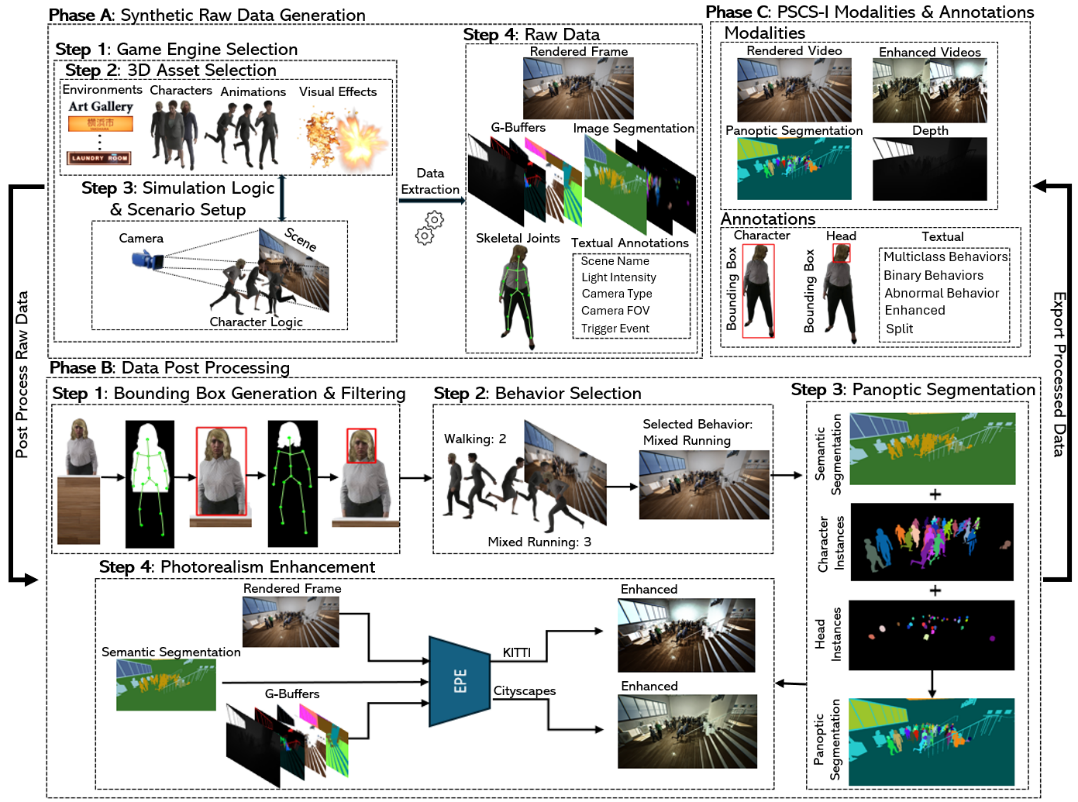

An overview of the proposed methodology for generating the PSCS-I dataset is illustrated in the figure above. The data generation pipeline is organized into three main phases.

Phase A, Synthetic Raw Data Generation:

- Step 1, Game Engine Selection: the most suitable of the available game engines (e.g., Unreal Engine, Unity, and CRYENGINE) is selected for the task at hand.

- Step 2, 3D Asset Selection: the 3D assets that will be employed to fill the environments are collected.

- Step 3, Simulation Logic & Setup: the simulation logic and scenario setup, which include the character behaviors and the environmental configuration, are defined.

- Step 4, Raw Data: the simulation is executed and the raw data are extracted.

Phase B, Post-Processing:

- Step 1, Bounding Box Generation & Filtering: the bounding boxes of visible (non-occluded) characters are generated.

- Step 2, Behavior Selection: the behavior label of each video frame is selected based on the behavior of each visible character.

- Step 3, Panoptic Segmentation: the semantic and instance segmentation maps are combined into the unified format of panoptic segmentation.

- Step 4, Photorealism Enhancement: the video frames are post-processed by EPE, producing photorealism-enhanced variants based on the characteristics of two different real-world datasets (Cityscapes and KITTI).

Phase C, PSCS-I Modalities & Annotations: the final visual modalities and textual data included in the PSCS-I dataset are stored.
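The panoptic segmentation step of Phase B can be illustrated with a minimal sketch. It assumes a Cityscapes-style encoding in which each pixel stores `class_id * 1000 + instance_id`; the encoding actually used in PSCS-I may differ, and the class id for "person", the label divisor, and the function names are all hypothetical.

```python
# Minimal sketch: merge semantic and instance maps into one panoptic map.
# ASSUMPTION: panoptic_id = class_id * LABEL_DIVISOR + instance_id
# (Cityscapes-style convention; not confirmed for PSCS-I).
LABEL_DIVISOR = 1000
PERSON_CLASS = 1              # hypothetical class id for characters
THING_CLASSES = {PERSON_CLASS}


def to_panoptic(semantic, instance):
    """Combine per-pixel class ids and instance ids (lists of rows)."""
    panoptic = []
    for sem_row, inst_row in zip(semantic, instance):
        row = []
        for class_id, inst_id in zip(sem_row, inst_row):
            # Only countable "thing" classes keep their instance id;
            # background "stuff" pixels get instance id 0.
            kept = inst_id if class_id in THING_CLASSES else 0
            row.append(class_id * LABEL_DIVISOR + kept)
        panoptic.append(row)
    return panoptic


def count_people(panoptic):
    """Crowd count = number of distinct panoptic ids of the person class."""
    ids = {p for row in panoptic for p in row
           if p // LABEL_DIVISOR == PERSON_CLASS}
    return len(ids)


# Toy 2x3 frame: class 0 = floor, 1 = person, 2 = wall.
semantic = [[0, 1, 1],
            [0, 1, 2]]
instance = [[0, 1, 2],
            [0, 1, 7]]   # the stray id on the wall pixel is discarded
panoptic = to_panoptic(semantic, instance)
people = count_people(panoptic)
```

A single integer per pixel keeps the map compact while still allowing both the class and the individual character to be recovered with integer division and modulo.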

PSCS-I Dataset

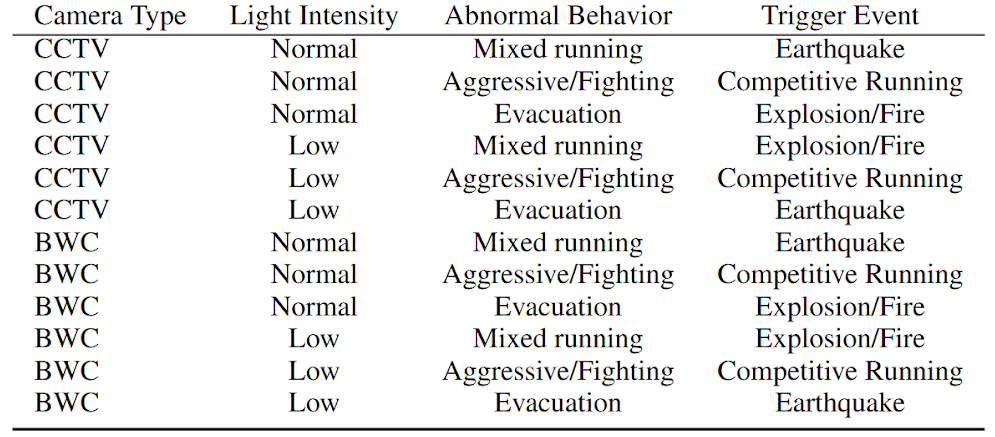

The PSCS-I dataset contains a large-scale collection of videos and high-quality annotations that can support crowd analysis-related tasks. It includes 647 videos: 312 rendered directly in UE5 and 335 photorealism-enhanced videos post-processed with the state-of-the-art image-to-image translation method, EPE. Each video has a duration of 5 minutes and is provided at 1920×1080 resolution and 30 frames per second (FPS) under different camera perspectives (CCTV and BWC), lighting conditions (low and normal), and indoor environments (a total of 26 unique indoor environments). In addition, each video simulates two or more of the five supported behaviors: walking and social interaction as normal behaviors, and aggressive/fighting, evacuation, and mixed running as abnormal behaviors. Because machine learning-based models are highly sensitive to imbalanced data, the videos are balanced across camera type, light intensity, and abnormal behavior. Trigger events are the exception: they are distributed equally among the behaviors to maintain representational fairness. This results in 12 scenarios for each environment, as shown in the table below.
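The figures quoted above can be cross-checked with a short sketch. It assumes that the 12 scenarios per environment are the full cross product of camera type, light intensity, and abnormal behavior, which is consistent with the stated counts but is an interpretation of the text; the variable names are illustrative only.

```python
from itertools import product

# Factors named in the text; the cross-product structure is an ASSUMPTION.
CAMERAS = ("CCTV", "BWC")
LIGHTING = ("low", "normal")
ABNORMAL_BEHAVIORS = ("fighting", "evacuation", "mixed running")

NUM_ENVIRONMENTS = 26
TOTAL_VIDEOS = 647        # 312 rendered + 335 photorealism-enhanced
MINUTES_PER_VIDEO = 5
FPS = 30

# One scenario per (camera, lighting, abnormal behavior) combination.
scenarios = list(product(CAMERAS, LIGHTING, ABNORMAL_BEHAVIORS))

rendered_videos = NUM_ENVIRONMENTS * len(scenarios)   # 26 * 12 = 312
frames_per_video = MINUTES_PER_VIDEO * 60 * FPS       # 9000 frames
total_hours = TOTAL_VIDEOS * MINUTES_PER_VIDEO / 60   # about 53.9 hours

for camera, light, behavior in scenarios:
    print(f"{camera:4s} | {light:6s} | {behavior}")
```

Under this reading, 26 environments with 12 scenarios each account for the 312 directly rendered videos, and 647 five-minute videos amount to just over 53.8 hours.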

The following video demonstrates 8 of the 26 environments included in PSCS-I, as well as the different normal and abnormal behaviors and trigger events that are simulated in PSCS-I.

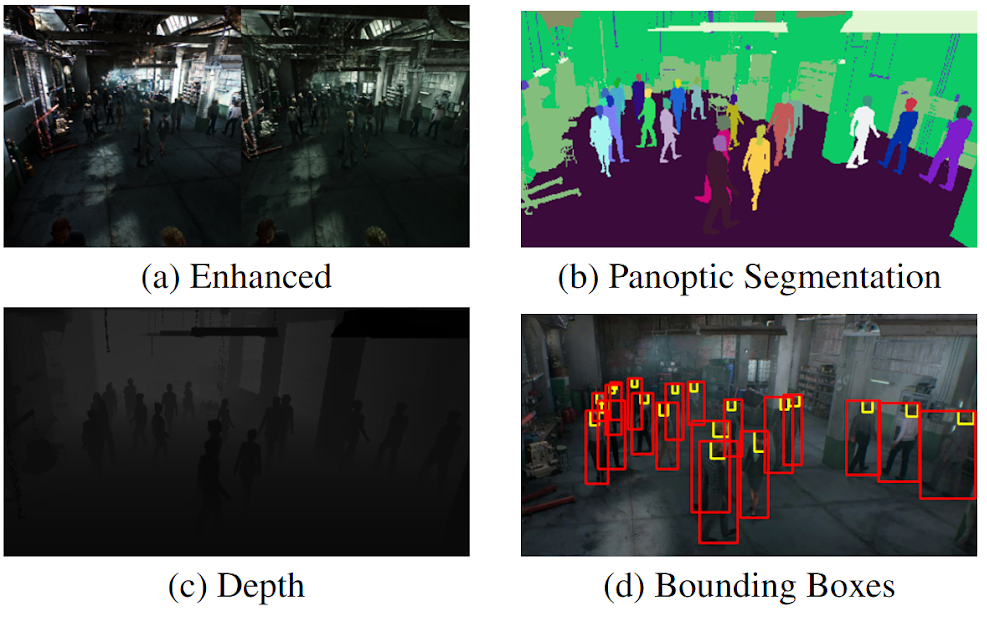

Finally, each video frame of the PSCS-I dataset is accompanied by a rich set of modalities and annotations. These include panoptic segmentation maps, which enable tasks such as crowd counting and scene understanding, and depth information, which is provided as compressed 2D arrays derived directly from the UE5 Z-buffer. The dataset also includes 2D bounding boxes for both the entire character and the character's head region, available in standard coordinate format as well as the widely used COCO and YOLO annotation formats. Additionally, textual annotations are provided, including the behavior labels and metadata describing the environment, camera properties, lighting, trigger events, and data splits (i.e., train, validation, and test). Examples of the modalities and annotations included in PSCS-I are depicted in the following figure.
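The relationship between the three bounding box formats can be sketched as follows. The sketch assumes that the "standard coordinate format" means pixel corner coordinates `(x_min, y_min, x_max, y_max)`, which is not confirmed by the dataset description; the function names are hypothetical, while the COCO (`[x, y, width, height]` in pixels) and YOLO (normalized center and size) conventions are the usual ones.

```python
# Frame size stated for PSCS-I videos.
IMG_W, IMG_H = 1920, 1080


def to_coco(x_min, y_min, x_max, y_max):
    """Corner box -> COCO [x, y, width, height] in pixels."""
    return [x_min, y_min, x_max - x_min, y_max - y_min]


def to_yolo(x_min, y_min, x_max, y_max, img_w=IMG_W, img_h=IMG_H):
    """Corner box -> YOLO (cx, cy, w, h), each normalized to [0, 1]."""
    width = x_max - x_min
    height = y_max - y_min
    cx = x_min + width / 2
    cy = y_min + height / 2
    return (cx / img_w, cy / img_h, width / img_w, height / img_h)


# ASSUMED input: a corner-format box centered in the frame.
box = (480, 270, 1440, 810)
coco_box = to_coco(*box)   # [480, 270, 960, 540]
yolo_box = to_yolo(*box)   # (0.5, 0.5, 0.5, 0.5)
```

Because YOLO coordinates are normalized by the image size, the same label remains valid if the 1920×1080 frames are resized, whereas COCO boxes must be rescaled along with the image.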

Dataset and models

Download models and code here.

A sample of the PSCS-I dataset is publicly available on Kaggle.

The PSCS-I dataset is available for download here. To get the access credentials, fill out the following form, and they will be emailed to you: Request Form

Citation

If you find our work useful in your research, please cite our paper as follows:

S. Pasios, K. Gkountakos, K. Ioannidis, T. Tsikrika, S. Vrochidis and I. Kompatsiaris, “Photorealistic Synthetic Crowds Simulation in Indoor environments (PSCS-I): a novel synthetic dataset for realistic simulation of crowd panic and violence behaviors,” in IEEE Access, doi: 10.1109/ACCESS.2025.3636727

License

PSCS-I was created with assets from the UE Marketplace/FAB, and these assets are depicted in the videos. The dataset was designed for predictive tasks (e.g., image classification and object detection) that solely operate on the original content and produce tags. In accordance with the FAB EULA, to access the dataset you must agree that it will be employed only for such predictive tasks. The related section of the EULA is reproduced below:

“For purposes of this Agreement, “Generative AI Programs” means artificial intelligence, machine learning, deep learning, neural networks, or similar technologies designed to automate the generation of or aid in the creation of new content, including but not limited to audio, visual, or text-based content. Programs do not meet this definition of Generative AI Programs where they, by non-limiting example, (a) solely operate on the original content; (b) generate tags to classify visual input content; or (c) generate instructions to arrange existing content, without creating new content.”

Contact

Stefanos Pasios: pasioss@iti.gr

Konstantinos Gkountakos: gountakos@iti.gr

Konstantinos Ioannidis: kioannid@iti.gr

Theodora Tsikrika: theodora.tsikrika@iti.gr

Related Projects