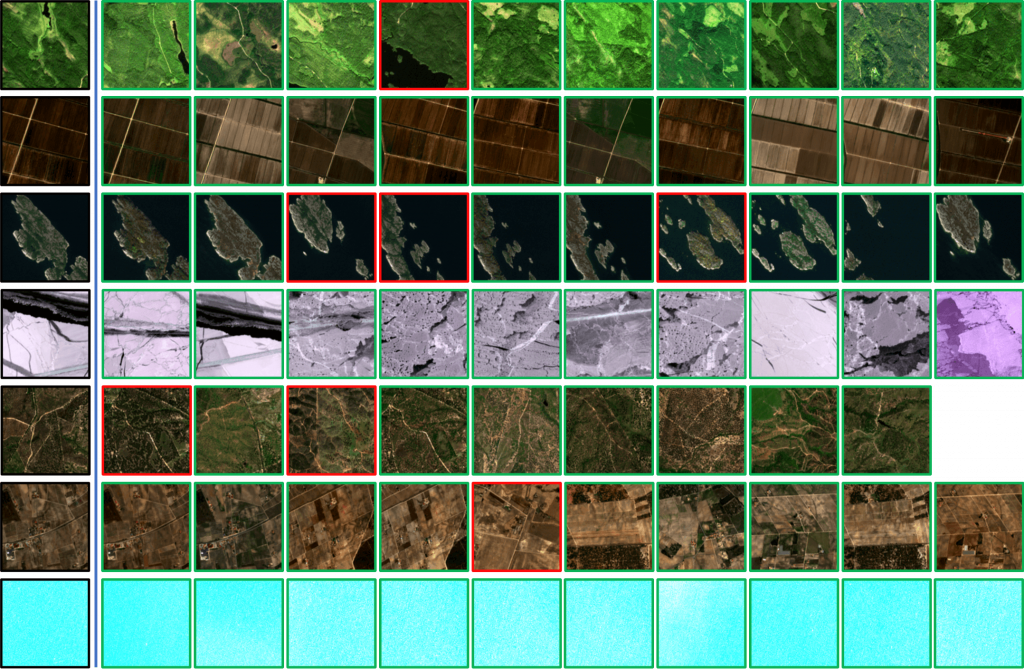

A key requirement in multimodal domains is the ability to integrate different pieces of information in order to derive high-level interpretations. In such environments, information is typically collected from multiple sources and complementary modalities, such as multimedia streams (e.g. using video analysis and speech recognition), lifestyle and other heterogeneous sensors. Although each modality is informative about specific aspects of interest, the individual pieces of information cannot, on their own, delineate complex situations. Combined, on the other hand, they can plausibly describe the semantics of a situation, facilitating intelligent situation awareness. Similarly, a social media post usually combines short text, an image captured by a mobile phone, user information, and spatial and temporal details. Satellite images often contain several bands beyond the optical channels of Red, Green and Blue, and are associated with specific geographic coordinates and acquisition dates and times. The effective fusion of multiple modalities is a challenging task that may take place at the feature level (early fusion) or at the decision level (late fusion). In this context, our research aims at developing intelligent frameworks for combining, fusing (at different levels) and interpreting observations from a number of different sensors and/or multimedia sources, to provide a robust and complete description of an environment, behavior or process of interest, as well as to enable decision support, analytics and retrieval applications.
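The distinction between early and late fusion can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not a description of any specific framework: the function names `early_fusion` and `late_fusion`, the modality names, and the choice of a weighted average for decision-level fusion are all hypothetical.

```python
from typing import Dict, List


def early_fusion(features: Dict[str, List[float]]) -> List[float]:
    """Feature-level (early) fusion: concatenate the per-modality feature
    vectors into one joint vector that a single model would consume.
    Modalities are visited in sorted name order so the layout is stable."""
    fused: List[float] = []
    for name in sorted(features):
        fused.extend(features[name])
    return fused


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Decision-level (late) fusion: each modality has already produced its
    own score; combine them with a weighted average (an assumed rule)."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(weights[name] * scores[name] for name in scores) / total_weight


# Hypothetical example: a social media post with a text and an image modality.
joint_vector = early_fusion({"text": [0.1, 0.2], "image": [0.3]})
combined_score = late_fusion(
    {"text": 0.8, "image": 0.4},   # per-modality classifier scores (made up)
    {"text": 1.0, "image": 1.0},   # equal weights (assumption)
)
```

In the early variant a single model sees all modalities at once and can exploit cross-modal correlations, whereas the late variant keeps one model per modality and only merges their outputs, which makes it easier to handle a missing modality.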